Google algorithm updates: the main search engine updates at a glance

Google is constantly changing its algorithm. For webmasters, it’s important to know when the search engine changed something and what changed with the newest algorithm update. But why does Google change its algorithm? The basic aim of the search engine’s algorithm changes is to make search results better and more relevant for users (ranking of the websites), but it isn’t known in advance which parameters Google plans to change or which steps webmasters have to take. We have provided you with an overview of the most important algorithm updates, their consequences, and how much they impact your website.

Current algorithm updates at a glance

When was the last Google algorithm update (data refresh)? Who was affected and what were the consequences in the search results? These questions are answered in the following overview on the latest updates.

04.05.2020 - May 2020 Core Update

In spite of the coronavirus crisis, Google has not ceased work on its algorithm. Google announced its “May 2020 Core Update” on May 4, 2020. Just a few hours later the update was rolled out, although according to Google it could take up to two weeks until the effects are noticeable in all areas. Just like the last major Google updates, the May 2020 Core Update was officially announced by Google on Twitter.

13.01.2020 - January 2020 Core Update

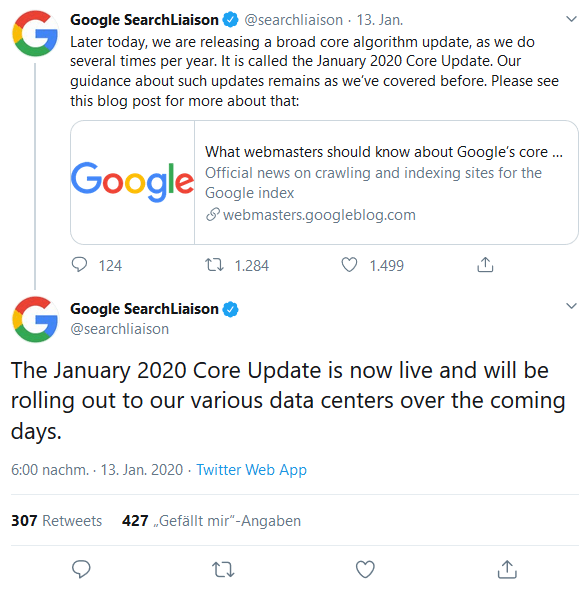

We weren’t even two weeks into 2020 when Google surprised webmasters with a new core update. Google announced the “January 2020 Core Update” on the official Google SearchLiaison Twitter account.

All previous, general information on Google core updates also applies to the January 2020 Core Update.

25.10.2019 - BERT Update

After all the core updates this year, Google still has one more up its sleeve for the end of 2019. With the BERT update, Google will introduce one of the biggest changes to the search system since the introduction of RankBrain around five years ago. The BERT update was officially announced by the company in a blog article. BERT is an abbreviation of Bidirectional Encoder Representations from Transformers and is a neural network-based technique for Natural Language Processing (NLP) pre-training. The search engine company already introduced this technology back in November 2018 in one of its blog posts as well as releasing the technology as Open Source.

The aim of BERT is for user queries to be understood even better. For example, when a user enters a word, its context should be recognized and taken into account. In order for this to work, prepositions such as “for” or “to” will become more the focus of algorithmic analysis and evaluation.

Google will first introduce the BERT update for the English language, but more languages will follow. According to Google, the update is already expected to deliver significantly better results for one in ten queries in the USA. It may sound like a small amount, but it’s an enormous step forward. You can find some examples in the blog post.

In addition to the normal search results, Google is also planning to use the BERT update to improve featured snippets, i.e. the highlighted short answers that are occasionally found above the normal search results. According to Google, the BERT update will deliver better results in over 20 countries with the company explicitly mentioning the Korean, Hindi, and Portuguese languages.

Even though Google sees the new BERT update as a big step forward in language understanding, the company is aware that many search queries still don’t deliver the perfect result. It has said that language processing still has a way to go and that further improvements can be expected over time.

24.09.2019 – September 2019 Core Update

After Google began actively communicating its major core updates, webmasters were eager to see when Google would roll out the next update. About three months after the last update, the time came to release the “September 2019 Core Update”, the search engine’s third core update for 2019. Google is thereby maintaining a rhythm of one update per quarter for the current year. The company announced the latest core search update via its official Twitter account for webmaster communication - “Google SearchLiaison”:

Later today, we are releasing a broad core algorithm update, as we do several times per year. It is called the September 2019 Core Update. Our guidance about such updates remains as we’ve covered before. Please see this blog for more about that: https://t.co/e5ZQUAlt0G

— Google SearchLiaison (@searchliaison) September 24, 2019

As is the case with previous updates, Google does not comment very specifically on the changes that accompany the update. More information can be found on the Webmaster Central Blog, which is intended to serve as a guide for all those affected by the updates. In its first analysis for Germany and Spain, the SEO tool developer Sistrix found that travel sites were most affected. Websites from the healthcare industry were also affected, just like with the last major Google update.

03.06.2019 - June 2019 Core Update

The latest Google update for 2019 revealed one thing in particular: The search engine is increasingly trying to be somewhat more transparent. Google not only announced the core update a day earlier, but also gave the update an official name: "June 2019 Core Update" – very rare behavior from Google.

Tomorrow, we are releasing a broad core algorithm update, as we do several times per year. It is called the June 2019 Core Update. Our guidance about such updates remains as we’ve covered before. Please see this tweet for more about that: https://t.co/tmfQkhdjPL

— Google SearchLiaison (@searchliaison) June 2, 2019

By providing this information, the company decreases the amount of speculation, but as always, Google remains reluctant to provide further information about the update. The search engine giant chooses not to reveal which parts of the algorithm have been adjusted or how webmasters can prepare for the change.

12.03.2019 - March 2019 Core Update

On March 12, Google confirmed the first official update for 2019. As with the last updates confirmed by the company, this was a core update. Google named the update the “March 2019 Core Update”. Other, confusing names such as “Florida 2 Update” had circulated within the webmaster scene, so Google chose a distinct name for this new update. According to Google, the current update has nothing to do with the Florida update:

We understand it can be useful to some for updates to have names. Our name for this update is "March 2019 Core Update." We think this helps avoid confusion; it tells you the type of update it was and when it happened.

— Google SearchLiaison (@searchliaison) March 15, 2019

Seeing as this is another core update, Google’s recommendation on how to react to the update as a site operator has not changed from previous advice (see below, in the post).

According to the search engine representative Danny Sullivan, this is already the third core update since Google started officially confirming major updates. It was announced worldwide, and the rollout should last about a week or more.

08.10.2018 – Medic update 2

In mid-August, the Medic update, with its misleading name, caused a stir in the search results pages. Some websites lost up to 80% of their visibility. But where there are losers, there are also winners, and it’s no different in the search results. Many webmasters were able to increase the visibility of their websites tremendously.

Google merely stated that there would be an update, but the company didn’t reveal which changes had been made to the algorithm. The big mystery began when website operators tried to work out what had changed or what they could do if they had been affected. Further information can be found in the following section on the “Medic update”.

The new update happened around October 8, 2018 and was simply called Medic update 2 in the industry (even though the name doesn’t really apply, it was retained for the sake of simplicity). With this update, Google seemed to partially reverse or reduce the changes that arose from the first Medic update.

As with the first Medic update, websites with sensitive topics (e.g. health topics, credit, insurance, etc.) were particularly affected. Interestingly, websites whose webmasters had not taken any decisive measures also recovered. The Medic update 2 shows once again that Google does make mistakes and tries to undo them with further updates. As a webmaster, you shouldn’t just presume that this is going to happen though.

29.09.2018 – Small core algorithm update

After the Medic update led to a few losers, but also some winners, the next smaller update confirmed by Google took place around 29.09.2018. In his confirmation tweet, Danny Sullivan reiterated that the Google core algorithm is updated all the time. Even though Sullivan spoke about a “smaller” update, webmasters saw sharp movements in their websites’ visibility index.

13.08.2018 – Medic update

To set the record straight, the Medic update doesn’t live up to its name. However, it once again illustrates how difficult and misleading the external analysis of a Google update can be. The name “Medic update” comes from the SEO industry (see, for example, Barry Schwartz at Search Engine Roundtable for more insights from the SEO community), which found after the first few analyses that medical websites were especially affected by this ranking algorithm update. But Google officially denied this theory by having webmaster trends analyst Gary Illyes announce on the stage of Search Masters Brasil that websites from the health sector in particular had been hit only by a “happy coincidence”. A few days earlier the official voice for Google, Danny Sullivan, confirmed that this update is a “broad core update”, which affects “all search queries”:

It's a broad core update which means it is involving all searches.

— Danny Sullivan (@dannysullivan) August 14, 2018

Even if the term “Medic update” is somewhat misleading, the underlying presumption may still hold to some extent: the update particularly affected websites where, among other things, the visitor’s trust plays an important role. Google summarizes these criteria under the abbreviation EAT, which stands for Expertise, Authoritativeness, and Trustworthiness. These three criteria are particularly relevant for websites with YMYL content. YMYL stands for Your Money or Your Life and covers websites whose information can influence the finances, health, satisfaction, or safety of the reader.

If you are affected by the Medic update, you should check the official Google Quality Rater Guidelines. This internal guide is intended to give human Quality Raters some guidelines on how a perfect website should look and be built from Google’s point of view. When it comes to health topics, for example, it should be clear who the author of the article is and what professional qualifications they have.

01.08.2018: Core algorithm update

The third core algorithm update in 2018 took place on 01.08.2018. Google officially confirmed on Twitter that this is a “broad core algorithm update”. At the same time, the tweet referred to the previous hints on how website operators should react to updates like these (have a look at the tweets further down in the article). The Google representative also confirmed again on Twitter that the latest ranking shifts were caused by an algorithm update. He referred to the following tweet:

This week we released a broad core algorithm update, as we do several times per year. Our guidance about such updates remains the same as in March, as we covered here: https://t.co/uPlEdSLHoX

— Google SearchLiaison (@searchliaison) August 1, 2018

In this update, Sullivan also reiterated that, in principle, website operators would be told about certain updates if they could change something specific. In this case, however, the site operator cannot change anything in particular to make up for any losses in ranking. Google wants to prevent webmasters from trying to “repair” things that aren’t broken.

When we have updates where there are specific things that may help, we do try to tell you that. With these, there is nothing specific to do -- and we do think it's actionable to understand that, in that hopefully people don't try fixing things that aren't really "broken" ...

— Danny Sullivan (@dannysullivan) August 1, 2018

He also stressed that the key to success is offering great content (high quality of the content), but he also admitted that this time it was the “same boring answer” as it had been so many times before.

16.04.2018: Core algorithm update

After a major core algorithm update in March 2018, Google followed up with another in April. The search engine operator announced via Twitter that on Monday 16.04.2018, another major core algorithm update was released. For background information, the company referred to the tweet from the last search algorithm update in March.

On Monday, we released a broad core algorithm update, as we routinely do throughout the year. For background and advice about these, see our tweet from last month: https://t.co/uPlEdSu6xp

— Google SearchLiaison (@searchliaison) April 20, 2018

07.03.2018: Core algorithm update

A major Google update took place on March 7, 2018. Via the official Twitter account @searchliaison, the search engine giant confirmed on March 12 that the assumptions of webmasters were true and that there had been a major update in the past few days:

Each day, Google usually releases one or more changes designed to improve our results. Some are focused around specific improvements. Some are broad changes. Last week, we released a broad core algorithm update. We do these routinely several times per year....

— Google SearchLiaison (@searchliaison) March 12, 2018

This so-called core algorithm update is a major update that Google only carries out a few times a year in this form. The company is making a change right at its heart: the core of the algorithm. Accordingly, many websites are affected by core updates like these and the effects are noticeable and visible worldwide. Google remains silent about the exact changes that have been made. It is therefore almost impossible for website operators to react to this update. In another tweet about the March update, Google merely explains that previously undervalued websites are benefitting from the update. For all those disadvantaged by the update, who have been struggling with declining visitor numbers and reduced visibility since March 2018, Google has a message for them: nothing is wrong with these sites. There is also no “fix”, although you should focus on providing great content.

There’s no “fix” for pages that may perform less well other than to remain focused on building great content. Over time, it may be that your content may rise relative to other pages.

— Google SearchLiaison (@searchliaison) March 12, 2018

Unlike the updates of previous years, the update that happened on 07.03.2018 has no official name.

In the Webmaster Hangout from 06.04.2018, John Mueller explained in concrete terms that the focus of the update was not so much the quality, but more the relevance. In order to increase the relevance of your own site, Mueller advises users to survey what could be done differently to improve the website. Also, you should always check the technical details, for example, whether the page can still be crawled by the Googlebot.
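One way to run the crawlability check mentioned above is to test a site's robots.txt rules against the Googlebot user agent. The following is a minimal sketch using Python's standard-library `urllib.robotparser`. The helper name `googlebot_may_crawl`, the example rules, and the `www.example.com` URLs are assumptions made for illustration, and Python's parser may not interpret every rule exactly the way Googlebot itself does.

```python
from urllib.robotparser import RobotFileParser


def googlebot_may_crawl(robots_txt: str, url: str) -> bool:
    """Return True if the given robots.txt text allows Googlebot to fetch url.

    Hypothetical helper for illustration; it only evaluates robots.txt rules
    and says nothing about server errors, noindex tags, or redirects.
    """
    parser = RobotFileParser()
    # parse() expects an iterable of lines, not a single string.
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch("Googlebot", url)


# Hypothetical robots.txt content for an example site (an assumption).
EXAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /private/

User-agent: Googlebot
Disallow: /drafts/
"""

if __name__ == "__main__":
    for path in ("/", "/drafts/post.html", "/private/page.html"):
        url = "https://www.example.com" + path
        print(path, googlebot_may_crawl(EXAMPLE_ROBOTS_TXT, url))
```

To check a live site instead of a pasted rule set, `RobotFileParser` also offers `set_url()` and `read()` to download the robots.txt file first. A page that passes this check can still be uncrawlable for other reasons, so the result is best treated as a first sanity check.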

The main Google algorithm updates of recent years

Experts have counted more than 40 updates to Google’s algorithm since 2009. We will introduce the five updates that have had the greatest impact on website operators.

2011: Panda update

The first rollout of the Panda update took place in February 2011. Panda was originally designed to be a regular filter, but in the meantime it’s become a fixed component of the Google Core algorithm. Even though Panda isn’t active in real-time, it ensures continuous quality assurance of the search results.

2012: Penguin update

The Penguin update is often referred to as the webspam update, since that name reflects the primary objective of the algorithm change: to stem the amount of spam in the search results in order to improve the relevance and quality of the results.

Google sees webspam as an unclean practice that is implemented to artificially improve the search engine ranking. A website that has only been optimized for the search engine and not for the user shouldn’t appear among the top search results. The task of the Penguin is to identify unnatural site optimization and curb any deliberate manipulation.

2013: Hummingbird update

Google rolled out the Hummingbird update in August 2013, just in time for the search engine’s 15th anniversary. For many experts, this was the most significant change to the search algorithm since 2000. It wasn’t just the ranking algorithm that was changed with the Hummingbird update, but rather the entire search algorithm. This update gets its name from the characteristics of the bird: speed and precision, which is how the Google search should be.

2014: Pigeon update

In 2014 the Pigeon update signified the biggest change in the Google universe. With Pigeon, the search engine company wanted local search mechanisms to be tied more closely to its general algorithm and so be able to deliver more useful and relevant results. The user’s location became an even more important part of the search, and the importance of Google+ and Google My Business profiles also increased. This also meant that local small and medium-sized businesses had a chance to win new customers through Local SEO.

The Pigeon update had an important influence on Google Maps as well as on the normal search engine results, although the effects weren’t comparable to those of other updates. But it still had a clear impact since there was a change in many businesses’ strategies after the rollout of Pigeon. Local small and medium-sized businesses as well as large companies with different locations began to focus more on local search engine optimization.

2015: Mobile Friendly update

Until 2015 mobile sites were just exact copies of desktop versions. In April of the same year, Google confirmed the Mobile Friendly update, which has also been referred to as 'Mobilegeddon'. Since then, mobile search has become more independent and takes into account the usability of mobile websites and apps. This algorithm change only affects mobile searches.

Google updates: a calculation with many unknowns

Google updates are simultaneously a blessing and a curse. A curse for website operators who get penalized and are still reeling from the penalty months later. A blessing due to the continual improvements and enhancements for the user as Google strives to make itself even better and easier to use.

The big challenge, even for experienced SEO specialists, is that there are many unknowns regarding Google. The search engine giant often performs algorithm changes without giving concrete details of what’s happening. These unnamed updates are often referred to as phantom updates: the effects are noticeable, but the causes are a mystery. This won’t change in the future either, and further updates (whether official or phantom) ensure that SEO keeps changing and websites have to be optimized continuously.